Data Poisoning – A Security Threat in AI & Machine Learning

Simon Burge

As artificial intelligence (AI) and machine learning (ML) propel us into the future, a shadowy menace lurks within the data they learn from, threatening to corrupt the learning process itself.

This covert enemy of AI goes by the name of data poisoning.

As our reliance on AI for decision-making becomes ubiquitous, the insidious nature of data poisoning poses a grave challenge.

Unveiling its intricacies is crucial in safeguarding the accuracy and dependability of the cutting-edge technologies that shape our digital landscape.

This article covers what you need to know about data poisoning: what it is, how it works, what types there are, and how you can prevent it.

What is Data Poisoning?

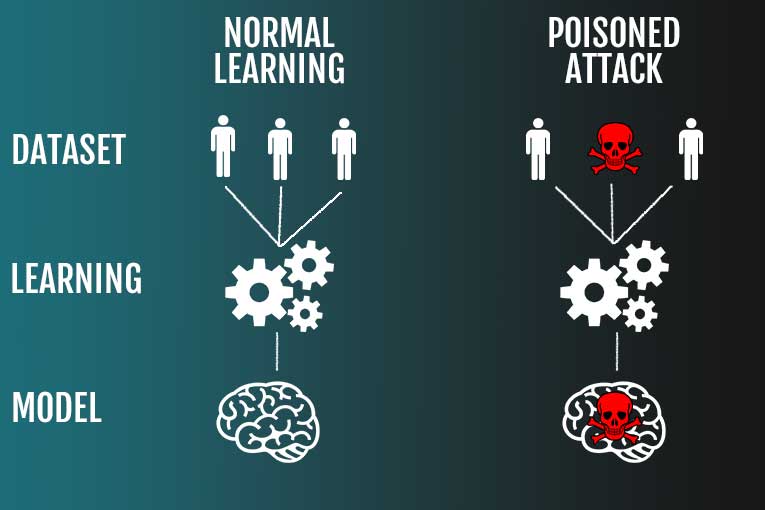

Data poisoning is a deceptive technique employed to corrupt the quality of training data in machine learning models.

It involves introducing malicious or misleading information into the datasets used to train AI algorithms, aiming to manipulate the behaviour of the model during the AI training and learning phase.

The intent behind data poisoning is to compromise the accuracy and effectiveness of machine learning models, leading them to make incorrect predictions or classifications.

Essentially, it’s an attempt to undermine the trustworthiness of AI systems by introducing tainted data that subtly alters their decision-making processes.

This insidious practice poses a significant threat, especially in contexts where AI plays a pivotal role, such as cybersecurity, autonomous vehicles, and critical decision-making processes in various industries.

Understanding the nuances of data poisoning is crucial for developing robust defences and ensuring the integrity of AI applications that have become integral to our daily lives.

Types of Data Poisoning Attacks

There are 4 main types of data poisoning attacks:

Availability Attacks

Availability attacks aim to degrade a model’s overall performance, typically by saturating the training dataset with irrelevant, redundant, or mislabelled information during the learning phase.

By flooding the dataset with this excess data, the attacker drowns out the features that matter, impairing the model’s ability to make accurate predictions on any input.

Targeted Attacks

Targeted data poisoning is a more sophisticated strategy where the attacker strategically injects malicious data points into specific areas of the dataset.

The goal is to manipulate the model’s decision boundaries, causing it to misclassify or prioritise certain outcomes over others.

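This form of data poisoning is often used to exploit vulnerabilities in the model’s structure and compromise its behaviour on the inputs the attacker cares about, often while leaving overall accuracy largely intact so the attack is harder to notice.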

Sub-population Attacks

In sub-population attacks, the attacker focuses on influencing the model’s behaviour concerning specific subgroups within the dataset.

By introducing biased or misleading information about certain demographic or categorical subsets, the attacker aims to induce discriminatory behaviour in the model, leading to inaccurate predictions for those sub-populations.
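One way to notice this kind of damage is to break evaluation results down by subgroup rather than relying on a single overall accuracy figure. The sketch below assumes you already have evaluation records of the form (group, true label, predicted label); the group names and the 0.2 gap threshold are illustrative assumptions, not recommended values.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Return {group: accuracy} for (group, true_label, predicted_label) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        correct[group] += int(truth == predicted)
    return {group: correct[group] / total[group] for group in total}

def lagging_groups(per_group, max_gap=0.2):
    """Groups whose accuracy trails the best group by more than max_gap."""
    best = max(per_group.values())
    return sorted(g for g, acc in per_group.items() if best - acc > max_gap)

# Hypothetical evaluation results for two subgroups.
records = [
    ("region_a", 1, 1), ("region_a", 0, 0), ("region_a", 1, 1), ("region_a", 0, 0),
    ("region_b", 1, 0), ("region_b", 0, 0), ("region_b", 1, 0), ("region_b", 0, 1),
]

per_group = accuracy_by_group(records)  # region_a: 1.0, region_b: 0.25
suspects = lagging_groups(per_group)    # ["region_b"]
```

A lagging subgroup is not proof of poisoning, since it can also reflect too little data for that group, but it tells you where to look first.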

Backdoor Attacks

Backdoor attacks involve strategically inserting malicious data points into the training dataset with the goal of creating a hidden “backdoor” in the model.

These backdoors can be exploited later during the model’s deployment, causing it to make incorrect predictions or actions under specific conditions predetermined by the attacker.

Backdoor attacks pose a severe threat because the model behaves normally on ordinary inputs, so the backdoor can stay unnoticed through training and testing and be activated later, allowing for surreptitious manipulation.
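For defenders, it helps to see how simple a backdoor trigger can be. The following sketch stamps a small bright patch onto a toy image, represented as a grid of pixel values, and pairs it with an attacker-chosen label. The patch shape, position, and the `"cat"` label are assumptions chosen purely for illustration.

```python
import copy

def add_trigger(image, patch_value=255, patch_size=2):
    """Return a copy of a 2-D pixel grid with a bright square in the bottom-right corner."""
    stamped = copy.deepcopy(image)  # leave the original image untouched
    for row in range(-patch_size, 0):
        for col in range(-patch_size, 0):
            stamped[row][col] = patch_value
    return stamped

def poison_sample(image, target_label):
    """Pair the triggered image with the label the attacker wants the model to learn."""
    return add_trigger(image), target_label

# A blank 4x4 greyscale image standing in for a real training sample.
clean_image = [[0] * 4 for _ in range(4)]
poisoned_image, poisoned_label = poison_sample(clean_image, target_label="cat")
```

A model trained on enough samples like this may learn to associate the patch with the target label, which is why audits often include inputs with unusual patches or patterns added.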

How is Data Poisoning Identified?

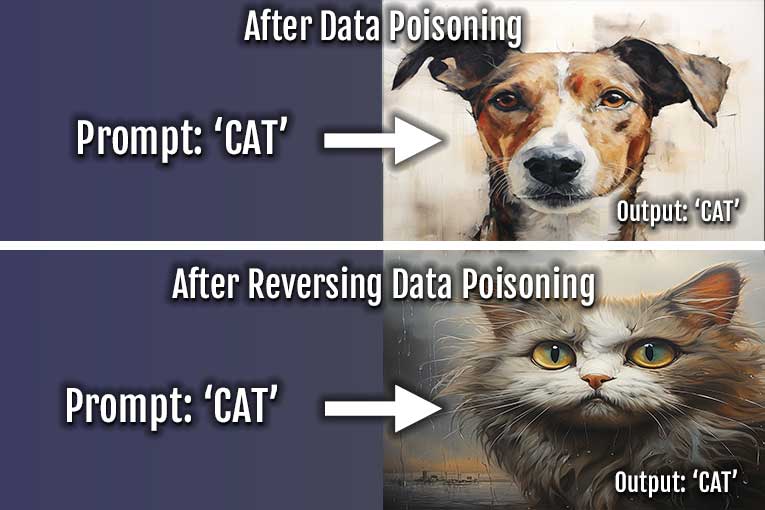

Detecting data poisoning involves recognising anomalies and inconsistencies in the model’s behaviour, especially in response to specific prompts.

One common manifestation is the model providing incorrect or unexpected outputs for certain inputs.

For instance, a poisoned image-generation model might respond to the prompt ‘apple’ with an image of an orange.

This deviation from the expected behaviour serves as a red flag, indicating potential data poisoning.

Continuous monitoring of model outputs and scrutinising responses to various inputs can uncover discrepancies that suggest malicious manipulation of the training data.

Analysing the model’s response to carefully crafted inputs, known as adversarial testing, can reveal vulnerabilities and irregularities indicative of data poisoning.

Furthermore, anomalies in the training dataset, such as unexpected patterns or outliers, may lead to incorrect responses during model deployment.

Adopting a holistic approach that combines anomaly detection, adversarial testing, and meticulous examination of model outputs enhances the ability to identify and address data poisoning effectively.

As AI and machine learning systems become more prevalent, refining techniques to detect and mitigate data poisoning is paramount for ensuring the trustworthiness and reliability of these technologies.

How Can You Stop Data Poisoning?

Stopping data poisoning entirely can be difficult, but there are methods that reduce the chance of it happening.

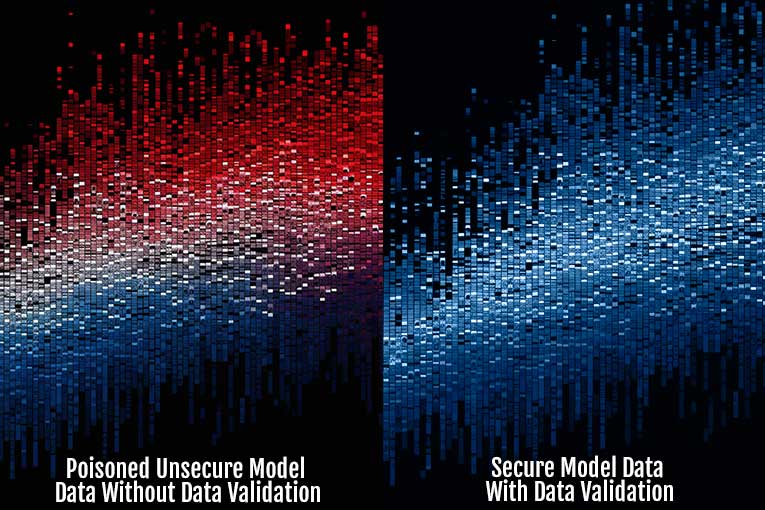

Robust Data Validation Procedures

Implement rigorous data validation processes during the model training phase.

Thoroughly inspect and cleanse datasets to identify and eliminate any poisoned or manipulated entries.

Employing anomaly detection algorithms and statistical analysis can aid in flagging irregularities.
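As one example of a statistical check, the sketch below flags values in a numeric column that sit far from the median, using the median absolute deviation (MAD). The function name, the sample column, and the 3.5 cutoff (a commonly used rule of thumb) are assumptions for illustration; a real pipeline would combine several such checks.

```python
from statistics import median

def flag_outliers(values, cutoff=3.5):
    """Return indices of values whose modified z-score exceeds the cutoff.

    Uses the median and median absolute deviation, which are less easily
    skewed by the outliers themselves than the mean and standard deviation.
    """
    centre = median(values)
    deviations = [abs(v - centre) for v in values]
    mad = median(deviations)
    if mad == 0:
        return []  # no spread to measure against; nothing can be flagged this way
    return [i for i, d in enumerate(deviations) if 0.6745 * d / mad > cutoff]

# Hypothetical feature column with one implausible entry.
feature_column = [10.1, 9.8, 10.3, 9.9, 10.0, 55.0]
suspect_rows = flag_outliers(feature_column)  # [5]
```

Flagged rows are candidates for manual review rather than automatic deletion, since genuine but rare data points can look like outliers too.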

Adversarial Training

Incorporate adversarial training techniques to fortify machine learning models against potential attacks.

By exposing models to intentionally manipulated or poisoned data during training, with the correct labels attached, they can learn to stay accurate on such inputs, enhancing their resilience.

Regular Model Audits

Conduct routine audits of machine learning models to detect any signs of abnormal behaviour or responses.

Regularly testing models with carefully crafted inputs can reveal vulnerabilities and deviations from expected outcomes, facilitating early identification of data poisoning.
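A routine audit can be as simple as running a fixed set of inputs with known expected outputs and recording every mismatch. In this sketch, `audit_model`, the canary list, and the stand-in `suspicious_model` are all hypothetical; in practice `predict` would wrap a call to your own model.

```python
def audit_model(predict, canaries):
    """Return (input, expected, actual) for every canary the model gets wrong."""
    failures = []
    for example, expected in canaries:
        actual = predict(example)
        if actual != expected:
            failures.append((example, expected, actual))
    return failures

# Inputs with known-good answers, kept under version control alongside the model.
canaries = [("apple", "apple"), ("orange", "orange"), ("banana", "banana")]

def suspicious_model(prompt):
    """Stand-in for a poisoned model that answers 'orange' when asked for 'apple'."""
    return {"apple": "orange"}.get(prompt, prompt)

failures = audit_model(suspicious_model, canaries)
# [("apple", "apple", "orange")]
```

Running the same canaries after every retraining makes it easier to tie a new failure to the batch of data that introduced it.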

Robust Security Measures

Adopt robust security protocols for protecting the machine learning infrastructure.

Secure data storage, transmission, and access points to prevent unauthorised alterations or introductions of malicious data.

Employ encryption and access controls to safeguard the integrity of the training data.
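One inexpensive integrity control is to record a cryptographic hash of each training file and verify it before every training run. The sketch below uses SHA-256 from Python's standard library; the helper names and the temporary `train.csv` file are assumptions for illustration only.

```python
import hashlib
import tempfile
from pathlib import Path

def sha256_of(path):
    """Hash a file in chunks so large datasets do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()

def build_manifest(paths):
    """Record the expected hash of every dataset file."""
    return {str(p): sha256_of(p) for p in paths}

def changed_files(manifest):
    """Return the files whose current hash no longer matches the manifest."""
    return [p for p, expected in manifest.items() if sha256_of(p) != expected]

# Demonstration with a throwaway file standing in for a real dataset.
with tempfile.TemporaryDirectory() as tmp:
    data_file = Path(tmp) / "train.csv"
    data_file.write_text("label,text\n0,hello\n")
    manifest = build_manifest([data_file])
    unchanged = changed_files(manifest)        # []

    data_file.write_text("label,text\n1,hello\n")  # simulate a flipped label
    tampered = changed_files(manifest)         # [path to train.csv]
```

This only detects changes made after the manifest was created, so the manifest itself should be stored somewhere the dataset's writers cannot modify.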

Information Sharing

Participate in collaborative efforts within the AI and machine learning community to share insights, experiences, and best practices for combating data poisoning.

Establishing a collective defence against evolving threats contributes to a more secure landscape.

Continuous Monitoring

Implement continuous monitoring systems that track the model’s behaviour in real-time.

Automated monitoring can quickly detect deviations from expected norms, allowing for prompt intervention and mitigation of potential data poisoning incidents.
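As a rough sketch of what such monitoring might compute, the code below compares the mix of predicted labels in a recent window against a trusted baseline, using total variation distance. The label names, window contents, and the 0.2 alert threshold are assumptions; a suitable threshold depends on how much your traffic naturally varies.

```python
from collections import Counter

def label_distribution(labels):
    """Convert a non-empty list of predicted labels into {label: share}."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}

def total_variation(baseline, recent):
    """Total variation distance between two label distributions (0 = same, 1 = disjoint)."""
    p, q = label_distribution(baseline), label_distribution(recent)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in set(p) | set(q))

ALERT_THRESHOLD = 0.2  # assumed value for illustration

# Hypothetical predictions: a trusted baseline window and a recent window.
baseline = ["ham"] * 80 + ["spam"] * 20
recent = ["ham"] * 40 + ["spam"] * 60

shift = total_variation(baseline, recent)  # approximately 0.4
alert = shift > ALERT_THRESHOLD            # True
```

A shift in outputs can also come from a genuine change in incoming data, so an alert is a prompt to investigate rather than a verdict.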

Education and Awareness

Educate stakeholders, including data scientists, developers, and end-users, about the risks and consequences of data poisoning.

Fostering awareness and understanding of potential threats encourages a proactive approach to security within the AI and machine learning ecosystem.

Can Data Poisoning be Reversed?

Data poisoning is a complex and challenging security threat, and its effects are often difficult to reverse entirely.

Once a machine learning model is trained on poisoned data, it acquires biases and incorrect patterns that can persist even after the identification of the poisoning.

However, there are some strategies to mitigate the impact and improve the model’s robustness:

Retraining Models

One approach is to retrain the affected machine learning models with a clean dataset, excluding the poisoned samples.

This process involves either training the model again from scratch on the cleaned data or falling back to a pre-existing, untainted dataset, so that nothing learned from the poisoned samples is carried over.

However, the success of this method depends on the severity of the poisoning and the model’s complexity.
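The sketch below illustrates the retraining idea with a deliberately tiny "model" that just memorises the most common label for each text. It assumes each sample records the source it came from, and that poisoned samples can be excluded by quarantining a source; the source names and the `train` function are hypothetical.

```python
from collections import Counter, defaultdict

def train(samples):
    """Toy model: map each text to its most frequent label in the training data."""
    votes = defaultdict(Counter)
    for text, label, _source in samples:
        votes[text][label] += 1
    return {text: counts.most_common(1)[0][0] for text, counts in votes.items()}

def retrain_without(samples, quarantined_sources):
    """Drop samples from quarantined sources, then train from scratch on the rest."""
    kept = [s for s in samples if s[2] not in quarantined_sources]
    return train(kept), len(samples) - len(kept)

# (text, label, source) records; the last three come from a compromised source.
samples = [
    ("win a prize", "spam", "vendor_a"),
    ("win a prize", "spam", "vendor_a"),
    ("meeting at 10", "ham", "vendor_a"),
    ("win a prize", "ham", "scraped_forum"),
    ("win a prize", "ham", "scraped_forum"),
    ("win a prize", "ham", "scraped_forum"),
]

poisoned_model = train(samples)                       # "win a prize" -> "ham"
clean_model, removed = retrain_without(samples, {"scraped_forum"})
# clean_model: "win a prize" -> "spam"; removed == 3
```

Tracking where each sample came from is what makes this kind of targeted exclusion possible, which is one reason to record provenance at collection time.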

Fine-Tuning

Fine-tuning involves adjusting the model’s parameters, usually by continuing training on a smaller trusted dataset, to correct for the biases introduced by the poisoned data.

This process requires a deep understanding of the model’s architecture and the specific impact of the poisoning on its learning.

Regular Updates

Implementing a system of regular updates to machine learning models can help counteract the persistence of data poisoning effects.

Continuously feeding the models with fresh, diverse, and clean data can dilute the influence of previously learned biases.

Why does Data Poisoning Happen?

Data poisoning happens for several reasons, ranging from deliberate malicious intent to human error and weak safeguards.

Understanding the motivations behind data poisoning is essential for devising effective prevention strategies.

Here are key factors contributing to the occurrence of data poisoning:

Copyright Protection

Copyright protection is a serious issue when it comes to AI generation, especially as generative models have been trained on copyrighted images and have produced output that closely resembles them.

In the context of AI and machine learning applied to content recognition or generation, artists or people acting on their behalf may deliberately alter published works so that a model trained on them without permission learns incorrect associations.

Malicious Intent

Some individuals or entities deliberately inject misleading or biased data into machine learning models with the intention of manipulating outcomes.

This could be for financial gain, competitive advantage, or to undermine the integrity of the model.

Human Error

Human errors during data collection, labelling, or preprocessing can inadvertently introduce biased or incorrect information.

Noise in the data, whether unintentional or due to external factors, can also contribute to poisoning.

Lack of Security Measures

Insufficient security measures and weak access controls make it easier for unauthorised entities to tamper with datasets.

Without robust authentication and authorisation mechanisms, malicious actors can exploit vulnerabilities.

In addition, failure to implement rigorous data validation processes can lead to the inclusion of tainted or manipulated data in training sets.

Proper validation checks are crucial to ensuring the quality and integrity of input data.

Conclusion

The fight against data poisoning demands unwavering commitment and proactive strategies.

Vigilance is the cornerstone: as the landscape evolves, new challenges and potential threats emerge.

Preventive measures must be integrated into the very fabric of AI systems, encompassing robust security protocols, stringent access controls, and continuous monitoring.

Understanding the motivations behind data poisoning allows us to tailor defences against specific threats.

As we strive for technological advancements, the imperative to uphold data ethics and transparency becomes even more pronounced.

Collaborative efforts across industries, academia, and cybersecurity communities are essential to stay ahead of evolving data poisoning tactics.